In one of recent project, we need to build a C++ HTTP Server with several REST logic. Why we use C++ other than other languages or frameworks? Simple reason is that it is the best language I master and is the fast way to build a system like this.

So for C++, which library or framework should I use to build this system? There is boost library, but it is too heavy I think. So I want to find a light one, and that is POCO C++ LIBRARIES.

POCO Libraries

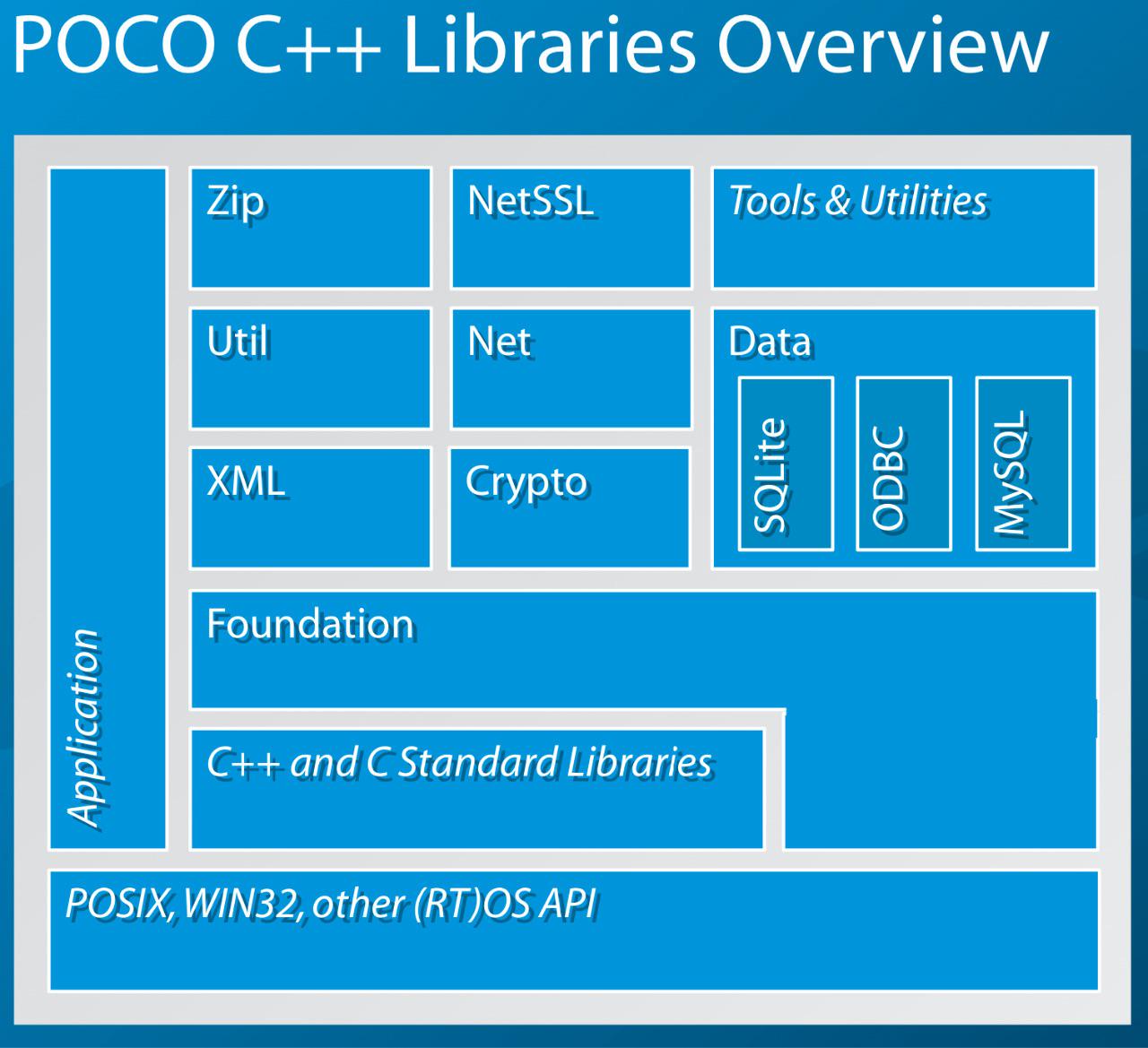

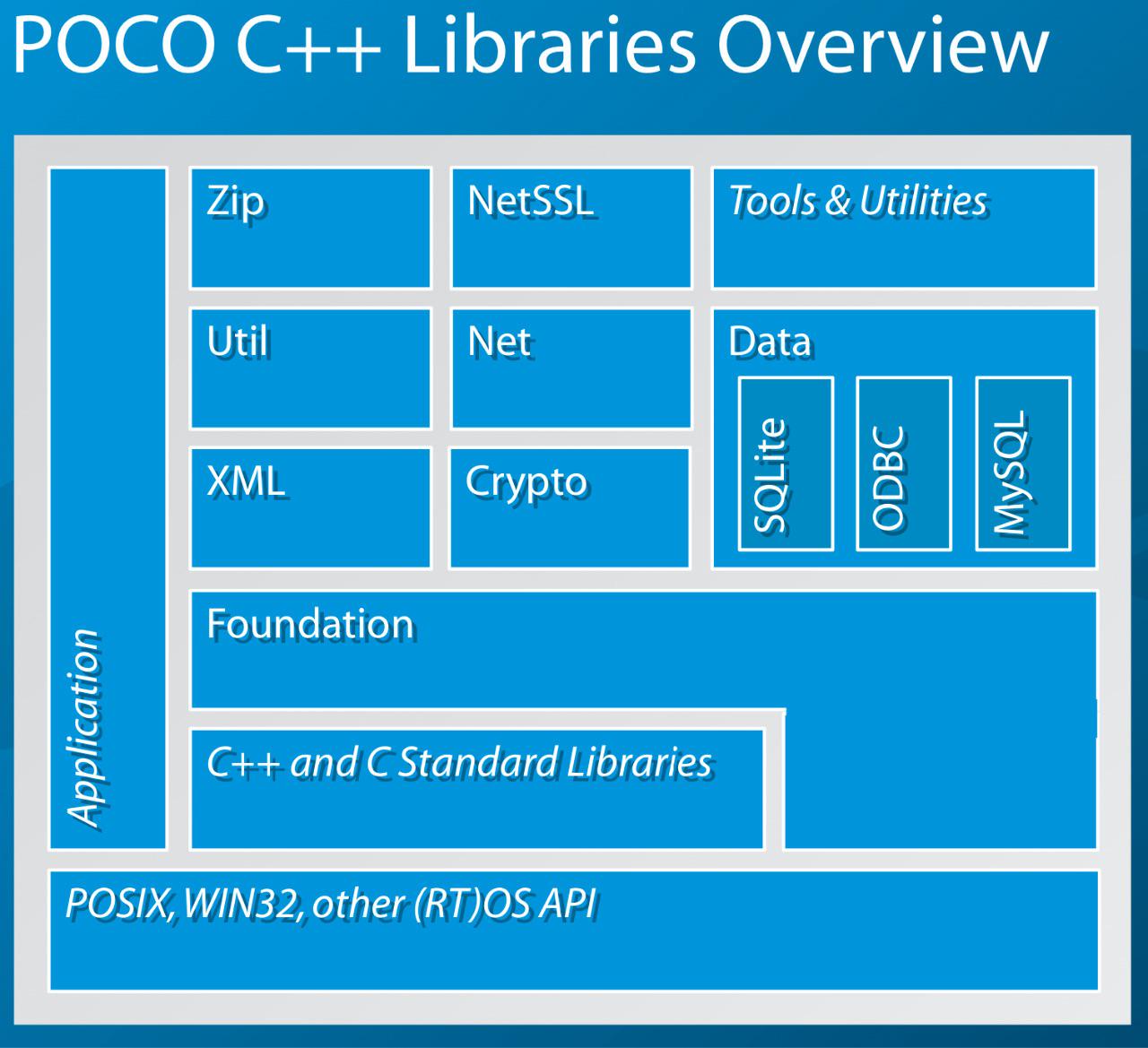

Here is a overview diagram for POCO:

It has basic libraries for STL like, and also some libraries like XML/JSON, and Net, Databse, etc.

For my own perpose, POCO has one HTTPServer class, which is very easy to setup one HTTP server.

// first, you declare one class which based on HTTPRequestHandler

// and implement the handleRequest function

class SimpleRequestHandler: public HTTPRequestHandler

{

public:

void handleRequest(HTTPServerRequest& request, HTTPServerResponse& response) {

// implement code

}

};

// second create one factory class to create handlers

class SimpleRequestHandlerFactory: public HTTPRequestHandlerFactory

{

public:

HTTPRequestHandler* createRequestHandler(const HTTPServerRequest& request) {

if (request.getURI() == "/hello")

return new SimpleRequestHandler;

}

// and then for create the HTTP Server

auto ip = config().getString("listen.ip", "0.0.0.0");

auto port = (unsigned short)config().getInt("listen.port", 9980);

PocoSocketAddress addr(ip, port);

int maxQueued = config().getInt("maxQueued", 1000);

int maxThreads = config().getInt("maxThreads", 30);

ThreadPool::defaultPool().addCapacity(maxThreads);

HTTPServerParams* pParams = new HTTPServerParams;

pParams->setKeepAlive(true);

pParams->setKeepAliveTimeout(Timespan(1 * Timespan::MINUTES + 15 * Timespan::SECONDS)); // 75s

pParams->setMaxQueued(maxQueued);

pParams->setMaxThreads(maxThreads);

PocoServerSocket svs(addr); // set-up a server socket

PocoHTTPServer srv(new SimpleRequestHandlerFactory(), svs,

pParams); // set-up a HTTPServer instance

srv.start(); // start the HTTPServer

waitForTerminationRequest(); // wait for CTRL-C or kill

srv.stop(); // Stop the HTTPServer

The above code works well with only one problem, which is that the server is thread pool based, which means is not very high performance.

POCO has SocketReactor class is like this but that is only for TCP, not the one you can use directly.

So, then we found Proxygen.

Proxygen

Simple introduction for proxygen is that it is HTTP Server libraries from facebook.

The first reason for use proxygen is that the HTTP Server class has the very like interface with POCO, like this:

auto port = (unsigned short)conf.getInt("listen.port", 9980);

auto ip = config().getString("listen.ip", "0.0.0.0");

auto threads = config().getInt("thrnum", 0);

if (threads <= 0) {

threads = sysconf(_SC_NPROCESSORS_ONLN);

}

std::vector<proxygen::HTTPServer::IPConfig> IPs = {

{FollySocketAddress(ip, port, true), Protocol::HTTP},

};

HTTPServerOptions options;

options.threads = static_cast<size_t>(threads);

options.idleTimeout = std::chrono::milliseconds(60000);

options.shutdownOn = {SIGINT, SIGTERM};

options.enableContentCompression = false;

options.handlerFactories = RequestHandlerChain().addThen<NewHandlerFactory>().build();

options.h2cEnabled = false;

proxygen::HTTPServer server(std::move(options));

server.bind(IPs);

server.start(); // block call

And the second is that, it metions websocket support introduction article.

While, at this time, it seems that chosen for proxygen is not a proper good idea.

Because it seems that they dropped the support for this or only use internally, and was not open sourced. There are several pull requests on the github page, may years ago and were not accepted. It seems that facebook has not maintain this project for a long time.

Besides the no websocket support, there is another problem for proxygen, that is the dependencies. It has a large dependencies, such as libevent, boost(several libraries, not all), double-conversion, gflags, glog, facebook folly, and facebook wangle.

folly

Facebook Folly is an open-source C++ library from facebook, and it contains a variety of core library components, likes POCO.

The feature we use mostly is folly future. It is based on the concept of promise and future in C++11, and extended with non-blocking then continuations.

Since proxygen is an async mode, all the action in http sever is non-blocking. The easy way for async is that you use many callbacks. The direct advantage for folly future is that you can use then expression to avoid callbacks, like this:

Future<Unit> fut3 = std::move(fut2)

.thenValue([](string str) {

cout << str << endl;

})

.thenTry([](folly::Try<string> strTry) {

cout << strTry.value() << endl;

})

.thenError(folly::tag_t<std::exception>{}, [](std::exception const& e) {

cerr << e.what() << endl;

});

Though folly future works well, but there is one big problem which is the stack level. If your application core dumps, and you want to use gdb to find the error, you will see there are many level of frames in the stack, and the debugging procedure is not a happy journey.

#c++ #poco #proxygen #folly #future